PRINCIPLES OF CLINICAL PHARMACOLOGY: INTRODUCTION

Drugs are the cornerstone of modern therapeutics. Nevertheless, it is well recognized among physicians and among the lay community that the outcome of drug therapy varies widely among individuals. While this variability has been perceived as an unpredictable, and therefore inevitable, accompaniment of drug therapy, this is not the case. The goal of this chapter is to describe the principles of clinical pharmacology that can be used for the safe and optimal use of available and new drugs.

Drugs interact with specific target molecules to produce their beneficial and adverse effects. The chain of events between administration of a drug and production of these effects in the body can be divided into two components, both of which contribute to variability in drug actions. The first component comprises the processes that determine drug delivery to, and removal from, molecular targets. The resultant description of the relationship between drug concentration and time is termed pharmacokinetics. The second component of variability in drug action comprises the processes that determine variability in drug actions despite equivalent drug delivery to effector drug sites. This description of the relationship between drug concentration and effect is termed pharmacodynamics. As discussed further below, pharmacodynamic variability can arise as a result of variability in function of the target molecule itself or of variability in the broad biologic context in which the drug-target interaction occurs to achieve drug effects.

Two important goals of the discipline of clinical pharmacology are (1) to provide a description of conditions under which drug actions vary among human subjects; and (2) to determine mechanisms underlying this variability, with the goal of improving therapy with available drugs as well as pointing to new drug mechanisms that may be effective in the treatment of human disease. The first steps in the discipline were empirical descriptions of the influence of disease X on drug action Y or of individuals or families with unusual sensitivities to adverse drug effects. These important descriptive findings are now being replaced by an understanding of the molecular mechanisms underlying variability in drug actions. Thus, the effects of disease, drug coadministration, or familial factors in modulating drug action can now be reinterpreted as variability in expression or function of specific genes whose products determine pharmacokinetics and pharmacodynamics. Nevertheless, it is the personal interaction of the patient with the physician or other health care provider that first identifies unusual variability in drug actions; maintained alertness to unusual drug responses continues to be a key component of improving drug safety.

Unusual drug responses, segregating in families, have been recognized for decades and initially defined the field of pharmacogenetics. Now, with an increasing appreciation of common polymorphisms across the human genome, comes the opportunity to reinterpret descriptive mechanisms of variability in drug action as a consequence of specific DNA polymorphisms, or sets of DNA polymorphisms, among individuals. This approach defines the nascent field of pharmacogenomics, which may hold the opportunity of allowing practitioners to integrate a molecular understanding of the basis of disease with an individual's genomic makeup to prescribe personalized, highly effective, and safe therapies.

It is self-evident that the benefits of drug therapy should outweigh the risks. Benefits fall into two broad categories: those designed to alleviate a symptom and those designed to prolong useful life. An increasing emphasis on the principles of evidence-based medicine and techniques such as large clinical trials and meta-analyses have defined benefits of drug therapy in specific patient subgroups. Establishing the balance between risk and benefit is not always simple: for example, therapies that provide symptomatic benefits but shorten life may be entertained in patients with serious and highly symptomatic diseases such as heart failure or cancer. These decisions illustrate the continuing highly personal nature of the relationship between the prescriber and the patient.

Some adverse effects are so common and so readily associated with drug therapy that they are identified very early during clinical use of a drug. On the other hand, serious adverse effects may be sufficiently uncommon that they escape detection for many years after a drug begins to be widely used. The issue of how to identify rare but serious adverse effects (that can profoundly affect the benefit-risk perception in an individual patient) has not been satisfactorily resolved. Potential approaches range from an increased understanding of the molecular and genetic basis of variability in drug actions to expanded postmarketing surveillance mechanisms. None of these have been completely effective, so practitioners must be continuously vigilant to the possibility that unusual symptoms may be related to specific drugs, or combinations of drugs, that their patients receive.

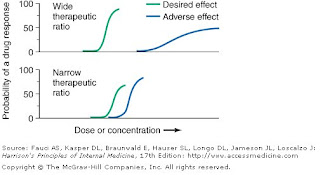

Beneficial and adverse reactions to drug therapy can be described by a series of dose-response relations (Fig. 1). Well-tolerated drugs demonstrate a wide margin, termed the therapeutic ratio, therapeutic index, or therapeutic window, between the doses required to produce a therapeutic effect and those producing toxicity. In cases where there is a similar relationship between plasma drug concentration and effects, monitoring plasma concentrations can be a highly effective aid in managing drug therapy by enabling concentrations to be maintained above the minimum required to produce an effect and below the concentration range likely to produce toxicity. Such monitoring has been widely used to guide therapy with specific agents, such as certain antiarrhythmics, anticonvulsants, and antibiotics. Many of the principles in clinical pharmacology and examples outlined below, which can be applied broadly to therapeutics, have been developed in these arenas.

Fig. 1. The concept of a therapeutic ratio. Each panel illustrates the relationship between increasing dose and cumulative probability of a desired or adverse drug effect. Top. A drug with a wide therapeutic ratio, i.e., a wide separation of the two curves. Bottom. A drug with a narrow therapeutic ratio; here, the likelihood of adverse effects at therapeutic doses is increased because the curves are not well separated. Further, a steep dose-response curve for adverse effects is especially undesirable, as it implies that even small dosage increments may sharply increase the likelihood of toxicity. When there is a definable relationship between drug concentration (usually measured in plasma) and desirable and adverse effect curves, concentration may be substituted on the abscissa. Note that not all patients necessarily demonstrate a therapeutic response (or adverse effect) at any dose, and that some effects (notably some adverse effects) may occur in a dose-independent fashion.

Principles of Pharmacokinetics

The processes of absorption, distribution, metabolism, and excretion—collectively termed drug disposition—determine the concentration of drug delivered to target effector molecules.

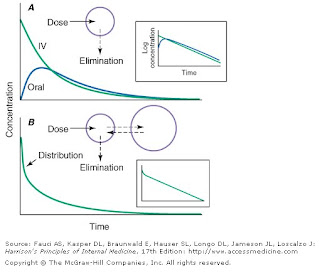

When a drug is administered orally, subcutaneously, intramuscularly, rectally, sublingually, or directly into desired sites of action, the amount of drug actually entering the systemic circulation may be less than with the intravenous route (Fig. 2A). The fraction of drug available to the systemic circulation by other routes is termed bioavailability. Bioavailability may be <100%.

Fig. 2. Idealized time-plasma concentration curves after a single dose of drug. A. The time course of drug concentration after an instantaneous IV bolus or an oral dose in the one-compartment model shown. The area under the time-concentration curve is clearly less with the oral drug than the IV, indicating incomplete bioavailability. Note that despite this incomplete bioavailability, concentration after the oral dose can be higher than after the IV dose at some time points. The inset shows that the decline of concentrations over time is linear on a log-linear plot, characteristic of first-order elimination, and that oral and IV drug have the same elimination (parallel) time course. B. The decline of central compartment concentration when drug is distributed both to and from a peripheral compartment and eliminated from the central compartment. The rapid initial decline of concentration reflects not drug elimination but distribution.

When a drug is administered by a nonintravenous route, the peak concentration occurs later and is lower than after the same dose given by rapid intravenous injection, reflecting absorption from the site of administration (Fig. 2). The extent of absorption may be reduced because a drug is incompletely released from its dosage form, undergoes destruction at its site of administration, or has physicochemical properties such as insolubility that prevent complete absorption from its site of administration. Slow absorption is deliberately designed into "slow-release" or "sustained-release" drug formulations in order to minimize variation in plasma concentrations during the interval between doses.
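The link between absorption, peak concentration, and bioavailability can be illustrated numerically. The sketch below assumes a one-compartment model with first-order absorption and elimination; all parameter values (dose, volume, rate constants, and a true bioavailability of 0.6) are hypothetical and chosen only for illustration. It estimates bioavailability as the ratio of the areas under the oral and intravenous time-concentration curves.

```python
import math


def iv_concentration(t, dose, volume, k_el):
    """Concentration after an instantaneous IV bolus (one compartment)."""
    return (dose / volume) * math.exp(-k_el * t)


def oral_concentration(t, dose, volume, k_el, k_abs, f):
    """Concentration after an oral dose with first-order absorption.

    Assumes k_abs != k_el; f is the fraction of the dose that reaches
    the systemic circulation.
    """
    scale = f * dose * k_abs / (volume * (k_abs - k_el))
    return scale * (math.exp(-k_el * t) - math.exp(-k_abs * t))


def auc_trapezoid(times, concs):
    """Area under the time-concentration curve by the trapezoidal rule."""
    area = 0.0
    for i in range(1, len(times)):
        area += (times[i] - times[i - 1]) * (concs[i] + concs[i - 1]) / 2.0
    return area


# Hypothetical parameters: 100 mg dose, 50 L volume, rate constants in 1/h.
DOSE, VOLUME, K_EL, K_ABS, TRUE_F = 100.0, 50.0, 0.2, 1.0, 0.6

times = [i * 0.01 for i in range(6001)]  # 0 to 60 h in 0.01 h steps
iv = [iv_concentration(t, DOSE, VOLUME, K_EL) for t in times]
oral = [oral_concentration(t, DOSE, VOLUME, K_EL, K_ABS, TRUE_F) for t in times]

estimated_f = auc_trapezoid(times, oral) / auc_trapezoid(times, iv)
oral_peak = max(oral)
time_of_oral_peak = times[oral.index(oral_peak)]

print(f"estimated bioavailability: {estimated_f:.3f}")
print(f"IV peak {iv[0]:.2f} mg/L at 0 h; "
      f"oral peak {oral_peak:.2f} mg/L at {time_of_oral_peak:.2f} h")
```

With these assumed values the oral peak is both later and lower than the intravenous peak, and the area ratio recovers the bioavailability that was put into the model.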

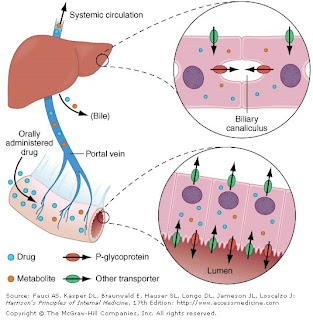

When a drug is administered orally, it must traverse the intestinal epithelium, the portal venous system, and the liver prior to entering the systemic circulation (Fig. 3). Once a drug enters the enterocyte, it may undergo metabolism, be transported into the portal vein, or undergo excretion back into the intestinal lumen. Both excretion into the intestinal lumen and metabolism decrease systemic bioavailability. Once a drug passes this enterocyte barrier, it may also be taken up into the hepatocyte, where bioavailability can be further limited by metabolism or excretion into the bile. This elimination in intestine and liver, which reduces the amount of drug delivered to the systemic circulation, is termed presystemic elimination, or first-pass elimination.

Fig. 3. Mechanism of presystemic clearance. After drug enters the enterocyte, it can undergo metabolism, excretion into the intestinal lumen, or transport into the portal vein. Similarly, the hepatocyte may accomplish metabolism and biliary excretion prior to the entry of drug and metabolites to the systemic circulation. [Adapted by permission from DM Roden, in DP Zipes, J Jalife (eds): Cardiac Electrophysiology: From Cell to Bedside, 4th ed.]

Drug movement across the membrane of any cell, including enterocytes and hepatocytes, is a combination of passive diffusion and active transport, mediated by specific drug uptake and efflux molecules. The drug transport molecule that has been most widely studied is P-glycoprotein, the product of the normal expression of the MDR1 gene. P-glycoprotein is expressed on the apical aspect of the enterocyte and on the canalicular aspect of the hepatocyte (Fig. 3); in both locations, it serves as an efflux pump, thus limiting availability of drug to the systemic circulation. P-glycoprotein is also an important component of the blood-brain barrier, discussed further below.

Drug metabolism generates compounds that are usually more polar and hence more readily excreted than parent drug. Metabolism takes place predominantly in the liver but can occur at other sites such as kidney, intestinal epithelium, lung, and plasma. "Phase I" metabolism involves chemical modification, most often oxidation accomplished by members of the cytochrome P450 (CYP) monooxygenase superfamily. CYPs that are especially important for drug metabolism (Table 1) include CYP3A4, CYP3A5, CYP2D6, CYP2C9, CYP2C19, CYP1A2, and CYP2E1, and each drug may be a substrate for one or more of these enzymes. "Phase II" metabolism involves conjugation of specific endogenous compounds to drugs or their metabolites. The enzymes that accomplish phase II reactions include glucuronyl-, acetyl-, sulfo- and methyltransferases. Drug metabolites may exert important pharmacologic activity, as discussed further below.

Table 1 notes. (a) Inhibitors affect the molecular pathway, and thus may affect substrate. (b) Clinically important genetic variants described. A listing of CYP substrates, inhibitors, and inducers is maintained at http://medicine.iupui.edu/flockhart/table.htm.

Clinical Implications of Altered Bioavailability

Some drugs undergo near-complete presystemic metabolism and thus cannot be administered orally. Nitroglycerin cannot be used orally because it is completely extracted prior to reaching the systemic circulation. The drug is therefore used by the sublingual or transdermal routes, which bypass presystemic metabolism.

Some drugs with very extensive presystemic metabolism can still be administered by the oral route, using much higher doses than those required intravenously. Thus, a typical intravenous dose of verapamil is 1–5 mg, compared to the usual single oral dose of 40–120 mg. Administration of low-dose aspirin can result in exposure of cyclooxygenase in platelets in the portal vein to the drug, but systemic sparing because of first-pass aspirin deacylation in the liver. This is an example of presystemic metabolism being exploited to therapeutic advantage.

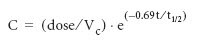

Most pharmacokinetic processes are first-order; i.e., the rate of the process depends on the amount of drug present. Clinically important exceptions are discussed below (see "Principles of Dose Selection"). In the simplest pharmacokinetic model (Fig. 2A), a drug bolus is administered instantaneously to a central compartment, from which drug elimination occurs as a first-order process. The first-order nature of drug elimination leads directly to the relationship describing drug concentration (C) at any time (t) following the bolus:

$$C = \frac{\text{dose}}{V_c} \cdot e^{-0.69\,t/t_{1/2}}$$

where Vc is the volume of the compartment into which drug is delivered and t1/2 is elimination half-life. As a consequence of this relationship, a plot of the logarithm of concentration vs time is a straight line (Fig. 2A, inset). Half-life is the time required for 50% of a first-order process to be complete. Thus, 50% of drug elimination is accomplished after one drug-elimination half-life, 75% after two, 87.5% after three, etc. In practice, first-order processes such as elimination are near-complete after four to five half-lives.
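The half-life arithmetic can be checked with a few lines of code. The sketch below assumes the one-compartment bolus relationship C = (dose/Vc) × exp(−0.693 × t / t1/2); the function names and the example dose, volume, and half-life are illustrative only.

```python
import math


def concentration(dose_mg, vc_l, half_life_h, t_h):
    """Concentration (mg/L) at time t after an instantaneous IV bolus."""
    k_el = math.log(2) / half_life_h  # first-order rate constant (1/h)
    return (dose_mg / vc_l) * math.exp(-k_el * t_h)


def fraction_eliminated(n_half_lives):
    """Fraction of a first-order process completed after n half-lives."""
    return 1.0 - 0.5 ** n_half_lives


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} half-lives: {100 * fraction_eliminated(n):.3f}% eliminated")
    # Hypothetical drug: 100 mg bolus, Vc = 10 L, half-life = 4 h.
    print(concentration(100.0, 10.0, 4.0, 4.0))
```

After four and five half-lives the completed fractions are 93.75% and 96.875%, which is why elimination is treated as near-complete at that point.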

In some cases, drug is removed from the central compartment not only by elimination but also by distribution into peripheral compartments. In this case, the plot of plasma concentration vs time after a bolus may demonstrate two (or more) exponential components (Fig. 2B). In general, the initial rapid drop in drug concentration represents not elimination but drug distribution into and out of peripheral tissues (also first-order processes), while the slower component represents drug elimination; the initial precipitous decline is usually evident with administration by intravenous but not other routes. Drug concentrations at peripheral sites are determined by a balance between drug distribution to and redistribution from peripheral sites, as well as by elimination. Once the distribution process is near-complete (four to five distribution half-lives), plasma and tissue concentrations decline in parallel.
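A two-component decline of this kind is commonly written as a sum of two exponentials. The sketch below uses that form as an assumption; the symbols A, ALPHA, B, and BETA and their values are hypothetical and do not come from the text. It compares the slope of the log-concentration curve early (distribution dominates) and late (elimination dominates).

```python
import math


def biexponential(t, a, alpha, b, beta):
    """Central-compartment concentration as a sum of two exponentials."""
    return a * math.exp(-alpha * t) + b * math.exp(-beta * t)


def log_slope(t1, t2, a, alpha, b, beta):
    """Average slope of ln(concentration) between two times (1/h)."""
    c1 = biexponential(t1, a, alpha, b, beta)
    c2 = biexponential(t2, a, alpha, b, beta)
    return (math.log(c2) - math.log(c1)) / (t2 - t1)


# Hypothetical coefficients (mg/L) and rate constants (1/h):
# a fast distribution phase (ALPHA) and a slow elimination phase (BETA).
A, ALPHA, B, BETA = 8.0, 2.0, 2.0, 0.1

early_slope = log_slope(0.0, 0.5, A, ALPHA, B, BETA)
late_slope = log_slope(20.0, 24.0, A, ALPHA, B, BETA)

print(f"early slope: {early_slope:.3f} per h")
print(f"late slope:  {late_slope:.3f} per h (elimination rate is {-BETA} per h)")
print(f"elimination half-life from late slope: {math.log(2) / -late_slope:.2f} h")
```

Estimating half-life from the early, steep portion of the curve would therefore understate the elimination half-life; only the late slope reflects elimination.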

Clinical Implications of Half-Life Measurements

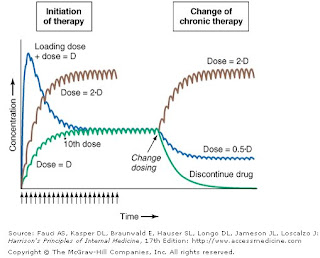

The elimination half-life not only determines the time required for drug concentrations to fall to near-immeasurable levels after a single bolus; it is also the key determinant of the time required for steady-state plasma concentrations to be achieved after any change in drug dosing (Fig. 4). This applies to the initiation of chronic drug therapy (whether by multiple oral doses or by continuous intravenous infusion), a change in chronic drug dose or dosing interval, or discontinuation of drug.

Fig. 4. Drug accumulation to steady state. In this simulation, drug was administered (arrows) at intervals = 50% of the elimination half-life. Steady state is achieved during initiation of therapy after ~5 elimination half-lives, or 10 doses. A loading dose did not alter the eventual steady state achieved. A doubling of the dose resulted in a doubling of the steady state but the same time course of accumulation. Once steady state is achieved, a change in dose (increase, decrease, or drug discontinuation) results in a new steady state in ~5 elimination half-lives. [Adapted by permission from DM Roden, in DP Zipes, J Jalife (eds): Cardiac Electrophysiology: From Cell to Bedside, 4th ed.]

Steady state describes the situation during chronic drug administration when the amount of drug administered per unit time equals drug eliminated per unit time. With a continuous intravenous infusion, plasma concentrations at steady state are stable, while with chronic oral drug administration, plasma concentrations vary during the dosing interval but the time-concentration profile between dosing intervals is stable (Fig. 4).
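Accumulation to steady state can be reproduced by adding each new dose to whatever remains of the previous ones. The sketch below assumes instantaneous bolus doses into a single compartment and, as in Fig. 4, a dosing interval equal to 50% of the elimination half-life; the dose, volume, and half-life values are hypothetical.

```python
import math


def peak_concentrations(n_doses, dose, volume, half_life, interval):
    """Peak concentration immediately after each of n repeated bolus doses."""
    k_el = math.log(2) / half_life
    remaining = math.exp(-k_el * interval)  # fraction left after one interval
    conc = 0.0
    peaks = []
    for _ in range(n_doses):
        conc = conc * remaining + dose / volume  # decay, then add the new dose
        peaks.append(conc)
    return peaks


def steady_state_peak(dose, volume, half_life, interval):
    """Limiting peak concentration after many doses (geometric series sum)."""
    k_el = math.log(2) / half_life
    return (dose / volume) / (1.0 - math.exp(-k_el * interval))


# Hypothetical regimen: 100 mg every 4 h, half-life 8 h, volume 50 L.
peaks = peak_concentrations(10, 100.0, 50.0, 8.0, 4.0)
limit = steady_state_peak(100.0, 50.0, 8.0, 4.0)
fraction_of_steady_state = peaks[-1] / limit
doubled = peak_concentrations(10, 200.0, 50.0, 8.0, 4.0)

print(f"after 10 doses: {100 * fraction_of_steady_state:.2f}% of steady state")
print(f"doubling the dose scales the 10th peak by {doubled[-1] / peaks[-1]:.2f}")
```

Ten doses at this interval span five half-lives, so the tenth peak sits at 1 − (1/2)^5 of the eventual steady state, and doubling the dose doubles every peak without changing the time course.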

In a typical 70-kg human, plasma volume is ~3 L, blood volume is ~5.5 L, extracellular water outside the vasculature is ~12 L, and total body water is ~42 L. The volume of distribution of drugs extensively bound to plasma proteins but not to tissue components approaches plasma volume; warfarin is an example. By contrast, for drugs highly bound to tissues, the volume of distribution can be far greater than any physiologic space. For example, the volume of distribution of digoxin and tricyclic antidepressants is hundreds of liters, obviously exceeding total-body volume. Such drugs are not readily removed by dialysis, an important consideration in overdose.

Clinical Implications of Drug Distribution

Digoxin accesses its cardiac site of action slowly, over a distribution phase of several hours. Thus, after an intravenous dose, plasma levels fall, but those at the site of action increase over hours. Only when distribution is near-complete does the concentration of digoxin in plasma reflect pharmacologic effect. For this reason, there should be a 6–8 h wait after administration before plasma levels of digoxin are measured as a guide to therapy.

Animal models have suggested, and clinical studies are confirming, that limited drug penetration into the brain, the "blood-brain barrier," often represents a robust P-glycoprotein–mediated efflux process from capillary endothelial cells in the cerebral circulation. Thus, drug distribution into the brain may be modulated by changes in P-glycoprotein function.

For some drugs, the indication may be so urgent that the time required to achieve steady-state concentrations may be too long. Under these conditions, administration of "loading" dosages may result in more rapid elevations of drug concentration to achieve therapeutic effects earlier than with chronic maintenance therapy (Fig. 4). Nevertheless, the time required for true steady state to be achieved is still determined only by elimination half-life. This strategy is only appropriate for drugs exhibiting a defined relationship between drug dose and effect.

Disease can alter loading requirements: in congestive heart failure, the central volume of distribution of lidocaine is reduced. Therefore, lower-than-normal loading regimens are required to achieve equivalent plasma drug concentrations and to avoid toxicity.
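The effect of a reduced central volume on loading requirements follows from the bolus relationship, in which the initial concentration is dose divided by central volume. The sketch below is a minimal illustration under that one-compartment assumption; the target concentration and both volumes are hypothetical numbers, not values for lidocaine or any other drug.

```python
def loading_dose(target_concentration, central_volume):
    """Bolus dose that produces the target concentration at time zero."""
    return target_concentration * central_volume


def initial_concentration(dose, central_volume):
    """Concentration immediately after a bolus into the central compartment."""
    return dose / central_volume


# Hypothetical values: target 3 mg/L; central volume 30 L normally, 18 L reduced.
TARGET = 3.0
usual_dose = loading_dose(TARGET, 30.0)
reduced_dose = loading_dose(TARGET, 18.0)

print(f"usual loading dose: {usual_dose:.0f} mg")
print(f"loading dose with reduced volume: {reduced_dose:.0f} mg")
print(f"usual dose given into the reduced volume: "
      f"{initial_concentration(usual_dose, 18.0):.1f} mg/L")
```

Giving the unadjusted dose into the smaller volume overshoots the target in proportion to the reduction in volume.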

Rate of Intravenous Administration

Although the simulations in Fig. 2 use a single intravenous bolus, this is very rarely appropriate in practice because side effects related to transiently very high concentrations can result. Rather, drugs are more usually administered orally or as a slower intravenous infusion. Some drugs are so predictably lethal when infused too rapidly that special precautions should be taken to prevent accidental boluses. For example, solutions of potassium for intravenous administration >20 mEq/L should be avoided in all but the most exceptional and carefully monitored circumstances. This minimizes the possibility of cardiac arrest due to accidental increases in infusion rates of more concentrated solutions.

While excessively rapid intravenous drug administration can lead to catastrophic consequences, transiently high drug concentrations after intravenous administration can occasionally be used to advantage. The use of midazolam for intravenous sedation, for example, depends upon its rapid uptake by the brain during the distribution phase to produce sedation quickly, with subsequent egress from the brain during the redistribution of the drug as equilibrium is achieved.

Similarly, adenosine must be administered as a rapid bolus in the treatment of reentrant supraventricular tachycardias to prevent elimination by very rapid (t1/2 of seconds) uptake into erythrocytes and endothelial cells before the drug can reach its clinical site of action, the atrioventricular node.

Many drugs circulate in the plasma partly bound to plasma proteins. Since only unbound (free) drug can distribute to sites of pharmacologic action, drug response is related to the free rather than the total circulating plasma drug concentration.

Clinical Implications of Altered Protein Binding

For drugs that are normally highly bound to plasma proteins (>90%), small changes in the extent of binding (e.g., due to disease) produce a large change in the amount of unbound drug, and hence drug effect. The acute-phase reactant α1-acid glycoprotein binds to basic drugs, such as lidocaine or quinidine, and is increased in a range of common conditions, including myocardial infarction, surgery, neoplastic disease, rheumatoid arthritis, and burns. This increased binding can lead to reduced pharmacologic effects at therapeutic concentrations of total drug. Conversely, conditions such as hypoalbuminemia, liver disease, and renal disease can decrease the extent of drug binding, particularly of acidic and neutral drugs, such as phenytoin. Here, plasma concentration of free drug is increased, so drug efficacy and toxicity are enhanced if total (free + bound) drug concentration is used to monitor therapy.
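Why a small change in binding matters so much for a highly bound drug is a matter of simple arithmetic on the unbound fraction. The sketch below compares the same five-percentage-point drop in binding for a highly bound and a moderately bound drug at a fixed total concentration; the binding percentages and concentration are hypothetical.

```python
def free_concentration(total_concentration, fraction_bound):
    """Unbound concentration at a given total concentration."""
    return total_concentration * (1.0 - fraction_bound)


def fold_change_in_free(bound_before, bound_after):
    """Ratio of unbound fractions after vs before a change in binding."""
    return (1.0 - bound_after) / (1.0 - bound_before)


# Same absolute change in binding (5 percentage points) for two drugs.
highly_bound = fold_change_in_free(0.95, 0.90)
moderately_bound = fold_change_in_free(0.50, 0.45)

print(f"95% -> 90% bound: free fraction x{highly_bound:.2f}")
print(f"50% -> 45% bound: free fraction x{moderately_bound:.2f}")
print(f"free drug at total 10 mg/L, 95% bound: "
      f"{free_concentration(10.0, 0.95):.2f} mg/L")
```

At an unchanged total concentration, the free concentration doubles for the highly bound drug but rises by only a tenth for the moderately bound one, so a total-drug measurement alone can mislead.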

When drug is eliminated from the body, the amount of drug in the body declines over time. An important approach to quantifying this reduction is to consider that drug concentrations at the beginning and end of a time period are unchanged, and that a specific volume of the body has been "cleared" of the drug during that time period. This defines clearance as volume/time. Clearance includes both drug metabolism and excretion.

Clinical Implications of Altered Clearance

While elimination half-life determines the time required to achieve steady-state plasma concentrations (Css), the magnitude of that steady state is determined by clearance (Cl) and dose alone. For a drug administered as an intravenous infusion, this relationship is

$$C_{ss} = \frac{\text{dosing rate}}{Cl} \quad \text{or} \quad \text{dosing rate} = Cl \times C_{ss}$$

When drug is administered orally, the average plasma concentration within a dosing interval (Cavg,ss) replaces Css, and bioavailability (F) must be included:

$$F \times \text{dosing rate} = Cl \times C_{avg,ss}$$
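These two relationships translate directly into code. The sketch below is a minimal illustration; the function names, units, and example values for clearance, bioavailability, and target concentration are hypothetical.

```python
def css_infusion(dosing_rate, clearance):
    """Steady-state concentration for a continuous IV infusion.

    dosing_rate in mg/h and clearance in L/h give mg/L.
    """
    return dosing_rate / clearance


def cavg_ss_oral(dose, interval, bioavailability, clearance):
    """Average steady-state concentration during an oral dosing interval."""
    return bioavailability * (dose / interval) / clearance


def oral_dose_for_target(target_cavg, interval, bioavailability, clearance):
    """Oral dose per interval needed to reach a target average concentration."""
    return target_cavg * clearance * interval / bioavailability


# Hypothetical drug: clearance 5 L/h, oral bioavailability 0.5.
print(css_infusion(20.0, 5.0))                  # infusion at 20 mg/h
print(cavg_ss_oral(320.0, 8.0, 0.5, 5.0))       # 320 mg orally every 8 h
print(oral_dose_for_target(4.0, 8.0, 0.5, 5.0)) # dose for 4 mg/L average
```

In this example the oral regimen needs twice the intravenous dosing rate to reach the same average concentration because only half of each oral dose reaches the systemic circulation. Halving clearance at a fixed dosing rate doubles the steady-state concentration.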

Genetic variants, drug interactions, or diseases that reduce the activity of drug-metabolizing enzymes or excretory mechanisms may lead to decreased clearance and hence a requirement for downward dose adjustment to avoid toxicity. Conversely, some drug interactions and genetic variants increase CYP expression, and hence increased drug dosage may be necessary to maintain a therapeutic effect.

The Concept of High-Risk Pharmacokinetics

When drugs utilize a single pathway exclusively for elimination, any condition that inhibits that pathway (be it disease-related, genetic, or due to a drug interaction) can lead to dramatic changes in drug concentrations and thus increase the risk of concentration-related drug toxicity. For example, administration of drugs that inhibit P-glycoprotein reduces digoxin clearance, since P-glycoprotein is the major mediator of digoxin elimination; the risk of digoxin toxicity is high with this drug interaction unless digoxin dosages are reduced. Conversely, when drugs undergo elimination by multiple drug metabolizing or excretory pathways, absence of one pathway (due to a genetic variant or drug interaction) is much less likely to have a large impact on drug concentrations or drug actions.
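The contrast between single-pathway and multiple-pathway elimination can be made concrete by treating total clearance as the sum of independent pathway clearances. That additive model, the pathway names, and every number below are assumptions for illustration. Because steady-state concentration is dosing rate divided by clearance, the fold rise in concentration equals the old clearance divided by the new.

```python
def fold_increase_in_css(pathway_clearances, inhibited, fraction_inhibited=1.0):
    """Fold rise in steady-state concentration when one pathway is inhibited.

    pathway_clearances maps pathway name -> clearance (L/h); total
    clearance is assumed to be their sum.
    """
    total = sum(pathway_clearances.values())
    remaining = total - pathway_clearances[inhibited] * fraction_inhibited
    if remaining <= 0:
        raise ValueError("no residual clearance: steady state is never reached")
    return total / remaining


# Hypothetical drugs with the same total clearance (10 L/h).
single_pathway_drug = {"pathway_A": 9.0, "other": 1.0}
multi_pathway_drug = {"pathway_A": 3.4, "pathway_B": 3.3, "pathway_C": 3.3}

high_risk = fold_increase_in_css(single_pathway_drug, "pathway_A", 0.8)
low_risk = fold_increase_in_css(multi_pathway_drug, "pathway_A", 0.8)

print(f"drug dependent on one pathway: concentration x{high_risk:.2f}")
print(f"drug with three pathways:      concentration x{low_risk:.2f}")
```

The same 80% inhibition of one pathway more than triples the concentration of the drug that depends on it, but raises the other drug's concentration by roughly a third.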

From an evolutionary point of view, drug metabolism probably developed as a defense against noxious xenobiotics (foreign substances, e.g., from plants) to which our ancestors inadvertently exposed themselves. The organization of the drug uptake and efflux pumps and the location of drug metabolism in the intestine and liver prior to drug entry to the systemic circulation (Fig. 3) support this idea of a primitive protective function.

However, drug metabolites are not necessarily pharmacologically inactive. Metabolites may produce effects similar to, overlapping with, or distinct from those of the parent drug. For example, N-acetylprocainamide (

Principles of Pharmacodynamics

Once a drug accesses a molecular site of action, it alters the function of that molecular target, with the ultimate result of a drug effect that the patient or physician can perceive. For drugs used in the urgent treatment of acute symptoms, little or no delay is anticipated (or desired) between the drug-target interaction and the development of a clinical effect. Examples of such acute situations include vascular thrombosis, shock, malignant hypertension, or status epilepticus.

For many conditions, however, the indication for therapy is less urgent, and a delay between the interaction of a drug with its pharmacologic target(s) and a clinical effect is common. Pharmacokinetic mechanisms that can contribute to such a delay include uptake into peripheral compartments or accumulation of active metabolites. Commonly, the clinical effect develops as a downstream consequence of the initial molecular effect the drug produces. Thus, administration of a proton-pump inhibitor or an H2-receptor blocker produces an immediate increase in gastric pH but ulcer healing that is delayed. Cancer chemotherapy inevitably produces delayed therapeutic effects, often long after drug is undetectable in plasma and tissue. Translation of a molecular drug action to a clinical effect can thus be highly complex and dependent on the details of the pathologic state being treated. These complexities have made pharmacodynamics and its variability less amenable than pharmacokinetics to rigorous mathematical analysis. Nevertheless, some clinically important principles can be elucidated.

A drug effect often depends on the presence of underlying pathophysiology. Thus, a drug may produce no action or a different spectrum of actions in unaffected individuals compared to patients. Further, concomitant disease can complicate interpretation of response to drug therapy, especially adverse effects. For example, high doses of anticonvulsants such as phenytoin may cause neurologic symptoms, which may be confused with the underlying neurologic disease. Similarly, increasing dyspnea in a patient with chronic lung disease receiving amiodarone therapy could be due to drug, underlying disease, or an intercurrent cardiopulmonary problem. Thus the presence of chronic lung disease may alter the risk-benefit ratio in a specific patient to argue against the use of amiodarone.

Principles of Dose Selection

The desired goal of therapy with any drug is to maximize the likelihood of a beneficial effect while minimizing the risk of adverse effects. Previous experience with the drug, in controlled clinical trials or in postmarketing use, defines the relationships between dose (or plasma concentration) and these dual effects and provides a starting point for initiation of drug therapy.

Figure 1 illustrates the relationships among dose, plasma concentrations, efficacy, and adverse effects and carries with it several important implications:

1. The target drug effect should be defined when drug treatment is started. With some drugs, the desired effect may be difficult to measure objectively, or the onset of efficacy can be delayed for weeks or months; drugs used in the treatment of cancer and psychiatric disease are examples. Sometimes a drug is used to treat a symptom, such as pain or palpitations, and here it is the patient who will report whether the selected dose is effective. In yet other settings, such as anticoagulation or hypertension, the desired response is more readily measurable.

2. The nature of anticipated toxicity often dictates the starting dose. If side effects are minor, it may be acceptable to start at a dose highly likely to achieve efficacy and downtitrate if side effects occur. However, this approach is rarely if ever justified if the anticipated toxicity is serious or life-threatening; in this circumstance, it is more appropriate to initiate therapy with the lowest dose that may produce a desired effect.

3. The above considerations do not apply if these relationships between dose and effects cannot be defined. This is especially relevant to some adverse drug effects (discussed in further detail below) whose development is not readily related to drug dose.

4. If a drug dose does not achieve its desired effect, a dosage increase is justified only if toxicity is absent and the likelihood of serious toxicity is small. For example, a small percentage of patients with strong seizure foci require plasma levels of phenytoin >20 µg/mL to control seizures. Dosages to achieve this effect may be appropriate, if tolerated. Conversely, clinical experience with flecainide suggests that levels >1000 ng/mL, or dosages >400 mg/d, may be associated with an increased risk of sudden death; thus dosage increases beyond these limits are ordinarily not appropriate, even if the higher dosage appears tolerated.

Other mechanisms that can lead to failure of drug effect should also be considered; drug interactions and noncompliance are common examples. This is one situation in which measurement of plasma drug concentrations, if available, can be especially useful. Noncompliance is an especially frequent problem in the long-term treatment of diseases such as hypertension and epilepsy, occurring in >25% of patients in therapeutic environments in which no special effort is made to involve patients in the responsibility for their own health. Multidrug regimens with multiple doses per day are especially prone to noncompliance.

Monitoring response to therapy, by physiologic measures or by plasma concentration measurements, requires an understanding of the relationships between plasma concentration and anticipated effects. For example, measurement of QT interval is used during treatment with sotalol or dofetilide to avoid marked QT prolongation that can herald serious arrhythmias. In this setting, evaluating the electrocardiogram at the time of anticipated peak plasma concentration and effect (e.g., 1–2 h postdose at steady state) is most appropriate. Maintained high aminoglycoside levels carry a risk of nephrotoxicity, so dosages should be adjusted on the basis of plasma concentrations measured at trough (predose). On the other hand, ensuring aminoglycoside efficacy is accomplished by adjusting dosage so that peak drug concentrations are above a minimal antibacterial concentration. For dose adjustment of other drugs (e.g., anticonvulsants), concentration should be measured at its lowest during the dosing interval, just prior to a dose at steady state (Fig. 4), to ensure a maintained therapeutic effect.

Concentration of Drugs in Plasma as a Guide to Therapy

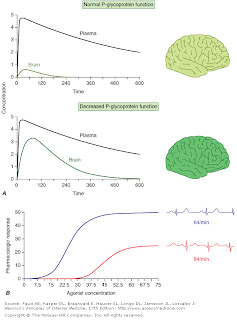

Factors such as interactions with other drugs, disease-induced alterations in elimination and distribution, and genetic variation in drug disposition combine to yield a wide range of plasma levels in patients given the same dose. Hence, if a predictable relationship can be established between plasma drug concentration and beneficial or adverse drug effect, measurement of plasma levels can provide a valuable tool to guide selection of an optimal dose. This is particularly true when there is a narrow range between the plasma levels yielding therapeutic and adverse effects, as with digoxin, theophylline, some antiarrhythmics, aminoglycosides, cyclosporine, and anticonvulsants. On the other hand, if drug access to important sites of action outside plasma is highly variable, monitoring plasma concentration may not provide an accurate guide to therapy (Fig. 5A).

Fig. 5. A. The efflux pump P-glycoprotein excludes drugs from the endothelium of capillaries in the brain, and so constitutes a key element of the blood-brain barrier. Thus, reduced P-glycoprotein function (e.g., due to drug interactions or genetically determined variability in gene transcription) increases penetration of substrate drugs into the brain, even when plasma concentrations are unchanged. B. The graph shows an effect of a β1-receptor polymorphism on receptor function in vitro. Patients with the hypofunctional variant may display greater heart-rate slowing or blood pressure lowering on exposure to receptor blocking agents.

The common situation of first-order elimination implies that average, maximum, and minimum steady-state concentrations are related linearly to the dosing rate. Accordingly, the maintenance dose may be adjusted on the basis of the ratio between the desired and measured concentrations at steady state; for example, if a doubling of the steady-state plasma concentration is desired, the dose should be doubled. In some cases, elimination becomes saturated at high doses, and the process then occurs at a fixed amount per unit time (zero order). For drugs with this property (e.g., phenytoin and theophylline), plasma concentrations change disproportionately more than the alteration in the dosing rate. In this situation, changes in dose should be small to minimize the degree of unpredictability, and plasma concentration monitoring should be used to ensure that dose modification achieves the desired level.
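As a rough illustration of the two cases above, the following Python sketch contrasts proportional dose adjustment under first-order elimination with the disproportionate rise in concentration expected when elimination saturates. The function names, the Michaelis-Menten form of the saturable model, and the values of `vmax` and `km` are assumptions introduced here for illustration; they are not taken from the text and are not dosing recommendations.

```python
def adjust_dose_first_order(current_dose, measured_css, desired_css):
    """Scale the maintenance dose by the ratio of desired to measured
    steady-state concentration. Only meaningful for first-order elimination."""
    if min(current_dose, measured_css, desired_css) <= 0:
        raise ValueError("dose and concentrations must be positive")
    return current_dose * desired_css / measured_css


def css_saturable(dosing_rate, vmax, km):
    """Steady-state concentration under an assumed Michaelis-Menten model:
    Css = km * rate / (vmax - rate). Undefined once the rate reaches vmax."""
    if not 0 < dosing_rate < vmax:
        raise ValueError("dosing rate must be positive and below vmax")
    return km * dosing_rate / (vmax - dosing_rate)


# First order: doubling the target concentration doubles the dose.
print(adjust_dose_first_order(100.0, measured_css=5.0, desired_css=10.0))

# Saturable elimination (hypothetical vmax = 500 mg/day, km = 4 mg/L):
# raising the dosing rate from 300 to 400 mg/day, a one-third increase,
# raises the steady-state concentration from 6 to 16 mg/L.
print(css_saturable(300.0, vmax=500.0, km=4.0))
print(css_saturable(400.0, vmax=500.0, km=4.0))
```

In the saturable case the steep, nonlinear response is the reason small dose steps and follow-up concentration measurements are advised.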

Determination of Maintenance Dose

An increase in dosage is usually best achieved by changing the drug dose but not the dosing interval, e.g., by giving 200 mg every 8 h instead of 100 mg every 8 h. However, this approach is acceptable only if the resulting maximum concentration is not toxic and the trough value does not fall below the minimum effective concentration for an undesirable period of time. Alternatively, the steady state may be changed by altering the frequency of intermittent dosing but not the size of each dose. In this case, the magnitude of the fluctuations around the average steady-state level will change—the shorter the dosing interval, the smaller the difference between peak and trough levels.
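The effect of the dosing interval on fluctuation can be sketched numerically. The Python example below assumes a one-compartment model with repeated intravenous bolus doses and first-order elimination; the model choice, the function name, and the half-life and volume values are hypothetical and serve only to illustrate the principle.

```python
import math


def steady_state_levels(dose_mg, tau_h, half_life_h, volume_l):
    """Return (peak, trough, average) steady-state concentrations in mg/L
    for repeated IV bolus dosing in an assumed one-compartment model."""
    k = math.log(2) / half_life_h          # first-order elimination rate constant
    decay = math.exp(-k * tau_h)           # fraction remaining after one interval
    peak = (dose_mg / volume_l) / (1.0 - decay)
    trough = peak * decay
    average = dose_mg / (k * volume_l * tau_h)
    return peak, trough, average


# Same total daily dose given two ways (hypothetical drug:
# half-life 8 h, volume of distribution 50 L).
for dose, tau in [(200.0, 8.0), (100.0, 4.0)]:
    peak, trough, avg = steady_state_levels(dose, tau, half_life_h=8.0, volume_l=50.0)
    print(f"{dose:.0f} mg every {tau:.0f} h: "
          f"peak {peak:.2f}, trough {trough:.2f}, average {avg:.2f} mg/L")
```

Under these assumptions both regimens give the same average concentration, while the peak-to-trough ratio falls from 2 with the 8-h interval to about 1.41 with the 4-h interval.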

EFFECTS OF DISEASE ON DRUG CONCENTRATION AND RESPONSE

Renal excretion of parent drug and metabolites is generally accomplished by glomerular filtration and by specific drug transporters, only now being identified. If a drug or its metabolites are primarily excreted through the kidneys and increased drug levels are associated with adverse effects, drug dosages must be reduced in patients with renal dysfunction to avoid toxicity. The antiarrhythmics dofetilide and sotalol undergo predominant renal excretion and carry a risk of QT prolongation and arrhythmias if doses are not reduced in renal disease. Thus, in end-stage renal disease, sotalol can be given as 40 mg after dialysis (every second day), compared with the usual dose of 80–120 mg every 12 h. The narcotic analgesic meperidine undergoes extensive hepatic metabolism, so that renal failure has little effect on its plasma concentration. However, its metabolite, normeperidine, does undergo renal excretion, accumulates in renal failure, and probably accounts for the signs of central nervous system excitation, such as irritability, twitching, and seizures, that appear when multiple doses of meperidine are administered to patients with renal disease. Protein binding of some drugs (e.g., phenytoin) may be altered in uremia, so measuring free drug concentration may be desirable.

In non-end-stage renal disease, changes in renal drug clearance are generally proportional to those in creatinine clearance, which may be measured directly or estimated from the serum creatinine. This estimate, coupled with the knowledge of how much drug is normally excreted renally vs nonrenally, allows an estimate of the dose adjustment required. In practice, most decisions involving dosing adjustment in patients with renal failure use published recommended adjustments in dosage or dosing interval based on the severity of renal dysfunction indicated by creatinine clearance. Any such modification of dose is a first approximation and should be followed by plasma concentration data (if available) and clinical observation to further optimize therapy for the individual patient.
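One common way to turn this reasoning into a first approximation is sketched below in Python. It assumes that renal clearance falls in proportion to creatinine clearance while nonrenal clearance is unchanged. The function name, the reference "normal" creatinine clearance of 100 mL/min, and the example values are assumptions made here for illustration; published drug-specific recommendations take precedence in practice.

```python
def renal_dose_fraction(fraction_excreted_renally, crcl_patient, crcl_normal=100.0):
    """Fraction of the usual dosing rate expected to give the usual exposure.

    Assumes renal clearance scales with creatinine clearance and nonrenal
    clearance is unaffected. crcl values are in mL/min; crcl_normal is an
    assumed reference value.
    """
    if not 0.0 <= fraction_excreted_renally <= 1.0:
        raise ValueError("fraction excreted renally must be between 0 and 1")
    if crcl_patient < 0 or crcl_normal <= 0:
        raise ValueError("creatinine clearance values must be non-negative")
    renal_function = min(crcl_patient / crcl_normal, 1.0)
    return 1.0 - fraction_excreted_renally * (1.0 - renal_function)


# Hypothetical drug that is 90% renally excreted, patient CLcr 30 mL/min:
print(round(renal_dose_fraction(0.9, 30.0), 2))   # 0.37

# Hypothetical drug that is only 10% renally excreted, same patient:
print(round(renal_dose_fraction(0.1, 30.0), 2))   # 0.93
```

The contrast between the two results shows why the fraction normally excreted renally matters as much as the degree of renal impairment.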

In contrast to the predictable decline in renal clearance of drugs in renal insufficiency, the effects of diseases like hepatitis or cirrhosis on drug disposition range from impaired to increased drug clearance, in an unpredictable fashion. Standard tests of liver function are not useful in adjusting doses. First-pass metabolism may decrease—and thus oral bioavailability increases—as a consequence of disrupted hepatocyte function, altered liver architecture, and portacaval shunts. The oral availability for high-first-pass drugs such as morphine, meperidine, midazolam, and nifedipine is almost doubled in patients with cirrhosis, compared to those with normal liver function. Therefore the size of the oral dose of such drugs should be reduced in this setting.

Under conditions of decreased tissue perfusion, the cardiac output is redistributed to preserve blood flow to the heart and brain at the expense of other tissues. As a result, drugs may be distributed into a smaller volume of distribution, higher drug concentrations will be present in the plasma, and the tissues that are best perfused (the brain and heart) will be exposed to these higher concentrations. If either the brain or heart is sensitive to the drug, an alteration in response will occur. In addition, decreased perfusion of the kidney and liver may impair drug clearance. Thus, in severe congestive heart failure, in hemorrhagic shock, and in cardiogenic shock, response to usual drug doses may be excessive, and dosage reduction may be necessary. For example, the clearance of lidocaine is reduced by about 50% in heart failure, and therapeutic plasma levels are achieved at infusion rates 50% or less of those usually required. The volume of distribution of lidocaine is also reduced, so loading regimens should be reduced.
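The link between reduced clearance and reduced infusion rate, and between reduced volume of distribution and reduced loading dose, follows from two standard relationships: infusion rate = clearance × target steady-state concentration, and loading dose = volume of distribution × target concentration. The Python sketch below applies them with hypothetical numbers chosen only to show the arithmetic; they are not lidocaine dosing guidance, and the function names are introduced here.

```python
def maintenance_infusion_rate(clearance_l_per_h, target_css_mg_per_l):
    """Infusion rate (mg/h) needed to hold a target steady-state concentration."""
    return clearance_l_per_h * target_css_mg_per_l


def loading_dose(volume_l, target_conc_mg_per_l):
    """Loading dose (mg) needed to reach a target concentration promptly."""
    return volume_l * target_conc_mg_per_l


target = 3.0  # mg/L, hypothetical target concentration

# Hypothetical values with normal perfusion.
print(maintenance_infusion_rate(40.0, target))  # 120.0 mg/h
print(loading_dose(80.0, target))               # 240.0 mg

# Clearance halved and volume of distribution reduced (hypothetical values).
print(maintenance_infusion_rate(20.0, target))  # 60.0 mg/h
print(loading_dose(50.0, target))               # 150.0 mg
```

Halving the clearance halves the infusion rate required for the same steady-state level, which matches the roughly 50% reduction described above.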

In the elderly, multiple pathologies and the medications used to treat them result in more drug interactions and adverse effects. Aging also results in changes in organ function, especially of the organs involved in drug disposition. Initial doses should be less than the usual adult dosage and should be increased slowly. The number of medications, and doses per day, should be kept as low as possible.

Even in the absence of kidney disease, renal clearance may be reduced by 35–50% in elderly patients. Dosage adjustments are therefore necessary for drugs that are eliminated mainly by the kidneys. Because muscle mass and therefore creatinine production are reduced in older individuals, a normal serum creatinine concentration can be present even though creatinine clearance is impaired; dosages should be adjusted on the basis of creatinine clearance, as discussed above. Aging also results in a decrease in the size of, and blood flow to, the liver and possibly in the activity of hepatic drug-metabolizing enzymes; accordingly, the hepatic clearance of some drugs is impaired in the elderly. As with liver disease, these changes are not readily predicted.
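The point that a normal serum creatinine can mask reduced creatinine clearance in older patients can be illustrated with the Cockcroft-Gault estimate. The text does not name a specific estimating equation, so the choice of Cockcroft-Gault here is an assumption, as are the function name and the example patients.

```python
def cockcroft_gault(age_years, weight_kg, serum_creatinine_mg_dl, female=False):
    """Estimated creatinine clearance (mL/min) by the Cockcroft-Gault equation:
    (140 - age) * weight / (72 * serum creatinine), times 0.85 for women."""
    if serum_creatinine_mg_dl <= 0 or weight_kg <= 0:
        raise ValueError("weight and serum creatinine must be positive")
    crcl = (140.0 - age_years) * weight_kg / (72.0 * serum_creatinine_mg_dl)
    return crcl * 0.85 if female else crcl


# Two hypothetical 70-kg men with the same "normal" serum creatinine of 1.0 mg/dL.
for age in (30, 80):
    print(f"age {age}: estimated CLcr {cockcroft_gault(age, 70.0, 1.0):.1f} mL/min")
```

With identical serum creatinine values, the estimate for the 80-year-old is a little over half that for the 30-year-old, which is why the clearance estimate rather than the serum creatinine alone should guide dosing.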

Elderly patients may display altered drug sensitivity. Examples include increased analgesic effects of opioids, increased sedation from benzodiazepines and other CNS depressants, and increased risk of bleeding while receiving anticoagulant therapy, even when clotting parameters are well controlled. Exaggerated responses to cardiovascular drugs are also common because of the impaired responsiveness of normal homeostatic mechanisms. Conversely, the elderly display decreased sensitivity to β-adrenergic receptor blockers.
